A Deep Dive into the Web Speech API

The ways we interact with technology continue to evolve beyond traditional keyboard and mouse input. Voice-based interactions have become increasingly important, offering more natural, accessible, and efficient ways to engage with applications. The Web Speech API is part of this shift: a powerful yet underused browser API that can significantly enhance how users experience web applications.

What Is the Web Speech API, and Why Should You Care?

The Web Speech API is a JavaScript interface that brings voice capabilities directly to web browsers, without the need for third-party plugins. It consists of two main components:

- Speech Recognition: Captures spoken words and converts them into text (speech-to-text)

- Speech Synthesis: Transforms text into natural-sounding speech (text-to-speech)

What makes this technology notable is that these voice capabilities are built into the browser and used through standard JavaScript. Developers can therefore create voice-enabled web experiences without requiring users to install additional software or learn new interfaces.

Speech Recognition

The SpeechRecognition interface is at the core of the Web Speech API's speech recognition functionality. It allows developers to capture and process spoken language.

How Speech Recognition Works

The speech recognition process follows this flow:

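- Your application creates a SpeechRecognition instance and calls its start() method

- The user grants microphone access (if not already granted) and speaks into their device's microphone

- The browser processes the audio; in Chrome and Safari, this means sending it to a server-based recognition service

- The recognized text is delivered to your application through result events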

You can customize this process through a range of properties and event handlers, as the following example implementation shows.

The comments in the code explain each property and event handler:

```javascript
// Advanced Speech-to-Text Demo using the Web Speech API

// Create a new instance of SpeechRecognition
// (Chrome and Safari use the webkit-prefixed constructor)
const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();

// Set properties
recognition.lang = 'en-US';        // Language to recognize
recognition.interimResults = true; // Enable interim results for real-time feedback
recognition.maxAlternatives = 5;   // Request up to 5 alternative transcripts per result

// Event handler for when a result is returned
recognition.onresult = (event) => {
  let interimTranscript = '';
  let finalTranscript = '';

  // Results from event.resultIndex onward are new or updated in this event
  for (let i = event.resultIndex; i < event.results.length; i++) {
    const bestAlternative = event.results[i][0]; // Most likely alternative

    if (event.results[i].isFinal) {
      finalTranscript += bestAlternative.transcript;
      console.log('Final transcript: ', finalTranscript);
    } else {
      interimTranscript += bestAlternative.transcript;
    }

    // The confidence score (0 to 1) indicates how certain the recognizer is
    console.log(`Confidence level: ${(bestAlternative.confidence * 100).toFixed(1)}%`);
  }

  console.log('Interim transcript: ', interimTranscript);
};

// Event handler for when recognition starts
recognition.onstart = () => {
  console.log('Speech recognition service has started');
};

// Event handler for when recognition ends
recognition.onend = () => {
  console.log('Speech recognition service has ended');
};

// Event handler for errors (event.error is a code such as 'no-speech' or 'not-allowed')
recognition.onerror = (event) => {
  console.error('Speech recognition error detected: ' + event.error);
};

// Event handler for when audio capture starts
recognition.onaudiostart = () => {
  console.log('Audio capturing started');
};

// Event handler for when audio capture ends
recognition.onaudioend = () => {
  console.log('Audio capturing ended');
};

// Event handler for when speech is first detected
recognition.onspeechstart = () => {
  console.log('Speech has been detected');
};

// Event handler for when speech is no longer detected
recognition.onspeechend = () => {
  console.log('Speech has stopped being detected');
};

// Start recognition (the browser asks for microphone access if needed)
recognition.start();
```

The interimResults property is particularly valuable for building responsive interfaces, because it provides real-time feedback while the user is still speaking, much like the live text modern voice assistants show as you talk.

Meanwhile, maxAlternatives lets you handle ambiguity by requesting up to that many possible interpretations of each result.
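If you only need a single phrase (for example, in a voice search box), the event-driven flow above can be wrapped in a promise. This is a minimal sketch rather than part of the API: `listenOnce` is a hypothetical helper, and the recognition constructor is passed in as a parameter (in a browser, `window.SpeechRecognition || window.webkitSpeechRecognition`), which also makes it easy to test with a fake object.

```javascript
// Hypothetical helper: runs one recognition session and resolves with the
// final transcript. RecognitionCtor is injected so the same code works with
// window.SpeechRecognition, window.webkitSpeechRecognition, or a test fake.
function listenOnce(RecognitionCtor, lang = 'en-US') {
  return new Promise((resolve, reject) => {
    const recognition = new RecognitionCtor();
    recognition.lang = lang;
    recognition.interimResults = false; // only final results are needed here
    let transcript = '';

    // Collect the most likely alternative of every final result.
    recognition.onresult = (event) => {
      for (let i = event.resultIndex; i < event.results.length; i++) {
        if (event.results[i].isFinal) {
          transcript += event.results[i][0].transcript;
        }
      }
    };

    // Reject with the error code (e.g. 'no-speech', 'not-allowed').
    recognition.onerror = (event) => reject(new Error(event.error));

    // With continuous left at its default (false), the session ends after
    // the user stops speaking. Resolving after a rejection has no effect.
    recognition.onend = () => resolve(transcript.trim());

    recognition.start();
  });
}
```

In a browser, usage might look like `listenOnce(window.SpeechRecognition || window.webkitSpeechRecognition).then((text) => console.log(text));`.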

SpeechRecognitionEvent Data

The SpeechRecognitionEvent object provides the following information:

- results: A SpeechRecognitionResultList, an array-like list of result objects for the session. Each element, results[i], represents one recognized segment of speech (often a phrase or sentence), not a single word.

- resultIndex: The lowest index in results that changed in this event; entries before it are unchanged.

- results[i][j]: The j-th alternative for result i, with results[i][0] being the most likely.

- results[i].isFinal: A Boolean value that indicates whether the result is final or interim.

- results[i][j].transcript: The recognized text for that alternative.

- results[i][j].confidence: The recognizer's confidence in that alternative, ranging from 0 to 1.
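Because `results` and each result inside it are array-like objects (a `SpeechRecognitionResultList` of `SpeechRecognitionResult` objects) rather than true arrays, it can help to copy the changed entries into plain objects before working with them. A minimal sketch; `collectResults` is a hypothetical helper name, and it relies only on the properties listed above.

```javascript
// Hypothetical helper: converts the results that changed in a
// SpeechRecognitionEvent into plain objects, keeping every alternative.
function collectResults(event) {
  const collected = [];
  // Only entries from resultIndex onward are new or updated in this event.
  for (let i = event.resultIndex; i < event.results.length; i++) {
    const result = event.results[i];
    const alternatives = [];
    // Each result holds up to maxAlternatives entries; index 0 is the most likely.
    for (let j = 0; j < result.length; j++) {
      alternatives.push({
        transcript: result[j].transcript,
        confidence: result[j].confidence,
      });
    }
    collected.push({ index: i, isFinal: result.isFinal, alternatives });
  }
  return collected;
}
```

In a browser you might use it as `recognition.onresult = (event) => console.log(collectResults(event));`.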

Recognition Accuracy

Factors like accents, background noise, and technical vocabulary can impact recognition accuracy. These challenges can be mitigated by:

- Implementing confidence thresholds (using the confidence score)

- Offering alternative matches when confidence is low

- Providing visual feedback of recognized speech so users can verify the accuracy
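The first two strategies can be combined: accept the top alternative when its confidence clears a threshold, and otherwise ask the user to choose among the alternatives. A minimal sketch; the helper name `interpretAlternatives` and the 0.75 default threshold are arbitrary assumptions to tune for your own application. Interim confidence values may not be meaningful, so apply it to final results.

```javascript
// Hypothetical helper: decides whether to trust the top transcript.
// `alternatives` is an array of { transcript, confidence } ordered from
// most to least likely, e.g. built from results[i][0..n-1].
function interpretAlternatives(alternatives, threshold = 0.75) {
  if (alternatives.length === 0) {
    return { status: 'no-match', text: '', options: [] };
  }
  const [best] = alternatives;
  if (best.confidence >= threshold) {
    return { status: 'accepted', text: best.transcript.trim(), options: [] };
  }
  // Low confidence: offer the distinct alternatives so the user can confirm one.
  const options = [...new Set(alternatives.map((alt) => alt.transcript.trim()))];
  return { status: 'confirm', text: best.transcript.trim(), options };
}
```

A `'confirm'` status could drive a "Did you mean...?" prompt listing `options`, which also gives users the visual feedback described in the last point.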

Internet Dependency

On Chrome and Safari, speech recognition relies on server-based processing, meaning it won't work offline. This is a significant consideration for mobile users in areas with poor connectivity.

Also note that the requests Chrome and Safari send to their recognition servers do not appear in the developer tools Network tab.
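Because recognition depends on a network service in these browsers, it helps to translate the API's error codes into actionable messages and to check connectivity when a `network` error occurs. A minimal sketch: the error code strings are those defined for `SpeechRecognitionErrorEvent.error`, while the helper name and the message texts are placeholders. In a browser, you would call it from `onerror` as `describeRecognitionError(event.error, navigator.onLine)`.

```javascript
// Placeholder user-facing messages for SpeechRecognitionErrorEvent.error codes.
const RECOGNITION_ERROR_MESSAGES = {
  'no-speech': 'No speech was detected. Please try again.',
  'aborted': 'Listening was cancelled.',
  'audio-capture': 'No microphone was found, or it could not be used.',
  'network': 'A network error prevented speech recognition. Please try again.',
  'not-allowed': 'Microphone access was blocked. Check your browser permissions.',
  'service-not-allowed': 'The speech recognition service is not available.',
  'bad-grammar': 'The recognition grammar could not be used.',
  'language-not-supported': 'The selected language is not supported.',
};

// Hypothetical helper: maps an error code to a message, with a more specific
// hint when a network error happens while the device is offline.
function describeRecognitionError(errorCode, isOnline = true) {
  if (errorCode === 'network' && !isOnline) {
    return 'You appear to be offline. Reconnect and try again.';
  }
  return RECOGNITION_ERROR_MESSAGES[errorCode] ?? `Speech recognition failed (${errorCode}).`;
}
```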

Additional Permissions on macOS

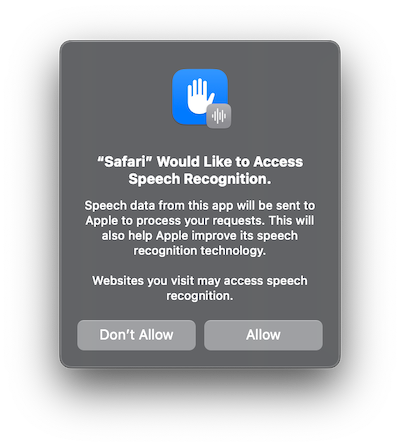

When a web app uses the Web Speech API's speech recognition interface, Safari shows an "Access Speech Recognition" prompt that asks the user for permission to send the audio data to Apple for processing.

On macOS, users can change this setting at any time under System Settings > Privacy & Security > Speech Recognition.

Speech Recognition Demo with Real-time Captions and Subtitles

Using the Web Speech API, we've built a fully working speech recognition demo that records from the camera and microphone, shows real-time captions while recording, and applies generated subtitles during playback.

It has the following features:

- Real-time captions while recording from the camera

- Multi-language captions

- Generated .vtt, .srt, and .json files with the resulting transcription after a recording stops

- A subtitle file generated and applied during video playback

The complete implementation code is available on GitHub.
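As a rough illustration of the subtitle step (not the demo's own code), the sketch below turns timed caption segments into SubRip (.srt) text. The API does not report where each phrase falls in the audio, so it assumes you record a start and end time yourself for each final result, for example from `event.timeStamp` relative to when recording began. The `{ start, end, text }` segment shape, in seconds, and the function names are hypothetical.

```javascript
// Formats seconds as an SRT timestamp: HH:MM:SS,mmm
function formatSrtTime(seconds) {
  const totalMs = Math.round(seconds * 1000);
  const ms = totalMs % 1000;
  const totalSeconds = Math.floor(totalMs / 1000);
  const s = totalSeconds % 60;
  const m = Math.floor(totalSeconds / 60) % 60;
  const h = Math.floor(totalSeconds / 3600);
  const pad = (value, width = 2) => String(value).padStart(width, '0');
  return `${pad(h)}:${pad(m)}:${pad(s)},${pad(ms, 3)}`;
}

// Hypothetical segment shape: { start, end, text }, with times in seconds.
// Each cue is a sequence number, a time range, and the caption text.
function toSrt(segments) {
  return segments
    .map((segment, i) =>
      `${i + 1}\n${formatSrtTime(segment.start)} --> ${formatSrtTime(segment.end)}\n${segment.text.trim()}\n`)
    .join('\n');
}
```

For the `<track>` element, browsers expect WebVTT instead, which starts with a `WEBVTT` header line and uses a period rather than a comma before the milliseconds.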

Speech Synthesis

The SpeechSynthesis interface is the controller for the Web Speech API's text-to-speech functionality. Together with SpeechSynthesisUtterance, which represents the text to be spoken, it lets developers build web applications that speak to their users.

Crafting the Perfect Voice

With this part of the Web Speech API, you can control the language, voice, pitch, rate, and volume of the generated speech, as seen in the following example.

The comments in the code explain each property and event handler:

```javascript
// Text-to-Speech Demo using the Web Speech API

// Select the text you want to convert to speech
const text = 'Hello, welcome to the advanced Web Speech API demo!';

// Create a new instance of SpeechSynthesisUtterance
const utterance = new SpeechSynthesisUtterance(text);

// Customize the voice characteristics
utterance.lang = 'en-US'; // Language and dialect
utterance.pitch = 1.2;    // Higher than the default of 1 (range 0 to 2)
utterance.rate = 0.9;     // Slightly slower than the default of 1 (range 0.1 to 10)
utterance.volume = 1;     // Full volume (range 0 to 1)

// Event handler for when the speech starts
utterance.onstart = () => {
  console.log('Speech synthesis has started');
};

// Event handler for when the speech ends
utterance.onend = () => {
  console.log('Speech synthesis has ended');
};

// Event handler for when there's an error
utterance.onerror = (event) => {
  console.error('Speech synthesis error detected: ' + event.error);
};

// Event handler for when a named SSML mark is reached (browser SSML support is limited)
utterance.onmark = (event) => {
  console.log(`Reached the mark: ${event.name} at charIndex: ${event.charIndex}`);
};

// Event handler for word and sentence boundaries (event.name is 'word' or 'sentence')
utterance.onboundary = (event) => {
  console.log(`Reached a ${event.name} boundary at charIndex: ${event.charIndex}`);
};

// Voices can load asynchronously: getVoices() may return an empty list
// until the voiceschanged event fires, so wait for them before choosing one
function loadVoices() {
  return new Promise((resolve) => {
    const voices = window.speechSynthesis.getVoices();
    if (voices.length > 0) {
      resolve(voices);
    } else {
      window.speechSynthesis.addEventListener(
        'voiceschanged',
        () => resolve(window.speechSynthesis.getVoices()),
        { once: true }
      );
    }
  });
}

loadVoices().then((voices) => {
  // Select a specific voice; the available voices depend on the browser and OS
  const selectedVoice = voices.find((voice) => voice.name === 'Google US English');
  if (selectedVoice) {
    utterance.voice = selectedVoice;
  }

  // Speak the text (Chrome may require a prior user gesture, such as a click)
  window.speechSynthesis.speak(utterance);
});
```

Events in SpeechSynthesisUtterance

- onstart: Triggered when the speech synthesis starts.

- onend: Triggered when the speech synthesis finishes.

- onerror: Triggered when an error occurs during speech synthesis.

- onpause: Triggered when the speech synthesis is paused.

- onresume: Triggered when the speech synthesis resumes after being paused.

- onmark: Triggered when the spoken utterance reaches a named SSML "mark" tag (browser support for SSML is limited).

- onboundary: Triggered when the spoken utterance reaches a word or sentence boundary.
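The boundary event is useful for highlighting text as it is spoken: its `charIndex` marks where the current word or sentence starts in the utterance text. A minimal sketch; `wordAt` is a hypothetical helper that recovers the word starting at `charIndex`, treating letters, digits, apostrophes, and hyphens as word characters. Where a browser also reports `charLength`, you can slice the text with it directly.

```javascript
// Hypothetical helper: returns the word that starts at charIndex in text,
// as reported by a SpeechSynthesisUtterance boundary event.
function wordAt(text, charIndex) {
  const match = /^[\p{L}\p{N}'-]+/u.exec(text.slice(charIndex));
  return match ? match[0] : '';
}

// Usage in a browser (not run here):
// utterance.onboundary = (event) => {
//   if (event.name === 'word') {
//     console.log('Speaking:', wordAt(utterance.text, event.charIndex));
//   }
// };
```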

Careful adjustments to pitch, rate, and voice selection let you create natural-sounding interactions and a voice that suits your product's tone, whether professional, friendly, authoritative, or playful. Keep in mind that the available voices depend on the user's browser and operating system, so a specific voice is not guaranteed to exist.
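Because a voice chosen by exact name may be missing on the user's device, a fallback strategy helps. A minimal sketch, assuming voice objects shaped like `SpeechSynthesisVoice` (`name`, `lang`, `default`): prefer an exact name, then a voice for the requested language, then the browser default. The helper names are hypothetical; the clamping ranges follow the spec (pitch 0 to 2, rate 0.1 to 10, volume 0 to 1).

```javascript
// Normalize language tags so 'en_US', 'en-us', and 'en-US' compare equal.
const normalizeLang = (tag) => tag.toLowerCase().replace(/_/g, '-');

// Hypothetical helper: picks a voice by exact name, then by language
// (exact tag, then same base language), then the default voice, else null.
function pickVoice(voices, { name, lang } = {}) {
  if (name) {
    const byName = voices.find((voice) => voice.name === name);
    if (byName) return byName;
  }
  if (lang) {
    const wanted = normalizeLang(lang);
    const exact = voices.find((voice) => normalizeLang(voice.lang) === wanted);
    if (exact) return exact;
    const base = wanted.split('-')[0];
    const sameBase = voices.find((voice) => normalizeLang(voice.lang).split('-')[0] === base);
    if (sameBase) return sameBase;
  }
  return voices.find((voice) => voice.default) ?? null;
}

const clamp = (value, min, max) => Math.min(max, Math.max(min, value));

// Hypothetical helper: applies pitch, rate, and volume within the spec's ranges.
function applyProsody(utterance, { pitch = 1, rate = 1, volume = 1 } = {}) {
  utterance.pitch = clamp(pitch, 0, 2);
  utterance.rate = clamp(rate, 0.1, 10);
  utterance.volume = clamp(volume, 0, 1);
  return utterance;
}
```

In a browser, once voices have loaded, you might call `pickVoice(speechSynthesis.getVoices(), { name: 'Google US English', lang: 'en-US' })` and assign the result to `utterance.voice` when it is not null.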

Use Cases

The applications of the Web Speech API extend well beyond novelty features. Here are some areas where voice input and output add practical value:

Voice-Controlled Applications

Enable users to control smart home devices or virtual assistants using voice commands.
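Speech recognition only returns text, so a voice-controlled app still has to map transcripts to actions. A minimal sketch, assuming a small hypothetical command table matched with regular expressions; the command phrasings and action names are illustrative only.

```javascript
// Hypothetical command table: each entry pairs a pattern with an action name.
// Capture groups become arguments (the room name is optional).
const COMMANDS = [
  { pattern: /^turn (on|off) (?:the )?(?:(.+?) )?lights?$/, action: 'setLights' },
  { pattern: /^set (?:the )?temperature to (\d+)(?: degrees)?$/, action: 'setTemperature' },
  { pattern: /^(?:stop|cancel)$/, action: 'stop' },
];

function matchCommand(transcript) {
  // Normalize: trim, lowercase, and drop trailing punctuation some engines add.
  const text = transcript.trim().toLowerCase().replace(/[.!?]+$/, '').trim();
  for (const { pattern, action } of COMMANDS) {
    const match = pattern.exec(text);
    if (match) {
      return { action, args: match.slice(1) };
    }
  }
  return null; // Unrecognized: ask the user to rephrase
}
```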

Accessibility Features

For users with visual impairments, motor disabilities, or reading difficulties, voice interfaces are not just convenient but essential. By implementing speech recognition and synthesis, you make your web applications usable by people who might otherwise struggle with traditional interfaces.

Educational Transformation

Language learning applications can utilize speech recognition to provide immediate pronunciation feedback. Mathematics and science platforms can verbally walk students through complex problem-solving steps.
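A simple form of pronunciation feedback compares the transcript with the phrase the learner was asked to say. A minimal sketch, assuming word-level comparison is good enough: it normalizes both strings, aligns them with a longest-common-subsequence table, and reports a score plus the expected words that were not recognized. This only approximates pronunciation quality, since the recognizer may guess or correct words; `scorePronunciation` is a hypothetical name.

```javascript
// Lowercase, replace punctuation with spaces, and split into words.
function toWords(text) {
  return text
    .toLowerCase()
    .replace(/[^\p{L}\p{N}'\s]/gu, ' ')
    .split(/\s+/)
    .filter(Boolean);
}

// Hypothetical helper: score = matched words / expected words, plus missed words.
function scorePronunciation(expected, transcript) {
  const a = toWords(expected);
  const b = toWords(transcript);
  // lcs[i][j] = length of the longest common subsequence of a[i..] and b[j..]
  const lcs = Array.from({ length: a.length + 1 }, () => new Array(b.length + 1).fill(0));
  for (let i = a.length - 1; i >= 0; i--) {
    for (let j = b.length - 1; j >= 0; j--) {
      lcs[i][j] = a[i] === b[j] ? lcs[i + 1][j + 1] + 1 : Math.max(lcs[i + 1][j], lcs[i][j + 1]);
    }
  }
  // Walk the table along an optimal path to find unmatched expected words.
  const missed = [];
  let i = 0;
  let j = 0;
  while (i < a.length) {
    if (j < b.length && a[i] === b[j]) {
      i++;
      j++;
    } else if (j < b.length && lcs[i][j + 1] >= lcs[i + 1][j]) {
      j++; // Skip an extra spoken word
    } else {
      missed.push(a[i]); // Expected word was not recognized
      i++;
    }
  }
  const score = a.length === 0 ? 1 : lcs[0][0] / a.length;
  return { score, missed };
}
```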

Interactive Gaming

Incorporate voice commands in games to enhance user experience.

Hands-Free Navigation

Allow users to navigate applications without using their hands, which helps when their hands are busy or when using a keyboard or mouse is difficult.

Browser Compatibility

Browser support varies, with Chrome offering the most comprehensive implementation. Feature detection lets you check for support at runtime and fall back to another interface when the API is unavailable:

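```javascript
// Check for speech recognition (Chrome and Safari expose the webkit-prefixed constructor)
if ('SpeechRecognition' in window || 'webkitSpeechRecognition' in window) {
  // Speech recognition is supported
  const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();
  // Continue with implementation
} else {
  // Provide an alternative interface
  console.log('Speech recognition not supported - offering text-based alternative');
}

// Check for speech synthesis separately, since its support differs from recognition
if ('speechSynthesis' in window) {
  // Speech synthesis is supported
} else {
  console.log('Speech synthesis not supported - showing text only');
}
```

Desktop support for major browsers: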

| Browser | Speech Synthesis Support | Speech Recognition Support |
|---|---|---|
| Chrome | Full (v33+) | Full (v33+) |
| Edge | Full (v14+) | Full (v79+) |
| Firefox | Full (v49+) | Not Supported |
| Safari | Full (v7+) | Partial (v14.1+) |

Mobile support for major browsers:

| Browser | Speech Synthesis Support | Speech Recognition Support |
|---|---|---|
| Chrome for Android | Full (v134) | Partial (v134) |
| Safari on iOS | Full (v7+) | Partial (v14.5+) |
| Firefox for Android | Full (v136+) | Not Supported |

Conclusion: The Future Is Speaking

Voice technology is not just the future; it is already changing how we interact with digital experiences. By implementing the Web Speech API, you can create more intuitive, accessible, and engaging user experiences today.

Whether you're building educational tools, e-commerce platforms, productivity applications, or content websites, voice capabilities can differentiate your offering and provide genuine value to users. The Web Speech API makes these powerful features accessible to web developers without requiring specialized voice recognition or natural language processing expertise.

References

For more information, visit the official Web Speech API MDN Web Docs.