Way Better Storage Logs In The Pipe Account Area

When we added the ability to push recordings to your preferred storage, one of the lower priority items was showing the logs created in the process.

Given the development time constraints back then, we decided to show only the logs for the last 100 attempts. When an issue came up, we would manually go to the database and extract all the relevant logs.

That changed last week.

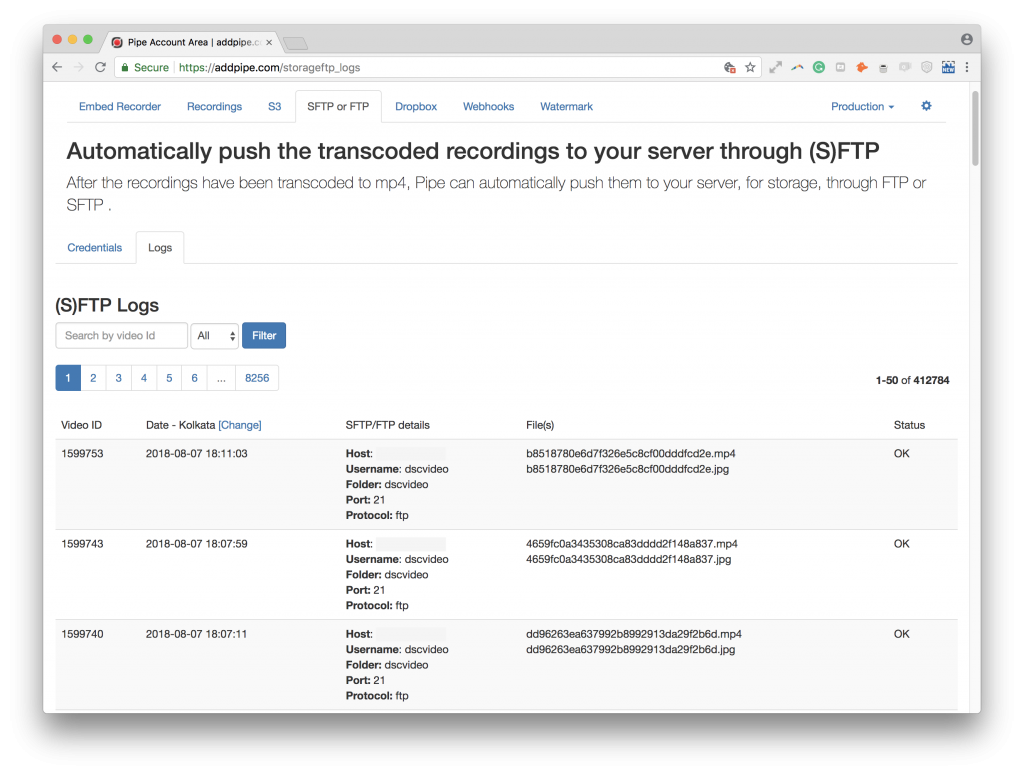

We’ve massively improved the logs system in the Pipe account area:

- You now have immediate access to all the logs for an environment/account and they’re easily browsable through pagination

- You can filter by recording id so that you can quickly find and track all our attempts to push a certain recording to your storage

- You can filter by type (successful or not) to help you identify problems when you’re processing thousands of recordings per day

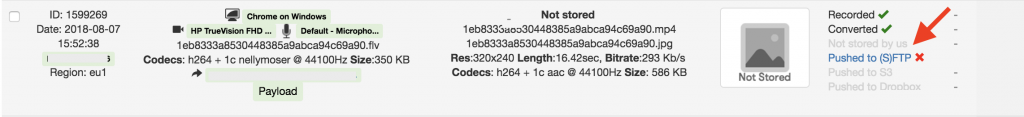

- We’ve also linked from the list of recordings directly to the S3/(S)FTP/Dropbox logs for each recording

- Each status code is explained in detail in a legend section at the bottom

With the above changes:

- you have immediate access to all the information we have when debugging a storage push issue

- you have a much better picture of the performance of your storage solution

- you have a much better picture of how many times and when we attempt to push the recordings to your storage

In case of a failed push attempt, we now retry every 2 minutes for 3 days. That results in 2160 retries over 3 days and just as many log entries. We’ll soon move to a system where we retry at increasingly longer intervals over the 3 days and even stop retrying in some cases, like when the FTP credentials are incorrect (FTP_LOGIN_FAILED).
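
To make the retry numbers concrete, here is a minimal Python sketch. It is not our actual implementation: the function names, the doubling factor and the `PERMANENT_FAILURES` set are assumptions made for illustration. Only the 2 minute interval, the 3 day window and the `FTP_LOGIN_FAILED` status code come from this post.

```python
from datetime import timedelta

RETRY_WINDOW = timedelta(days=3)        # from the post: retries span 3 days
FIXED_INTERVAL = timedelta(minutes=2)   # from the post: current retry interval

# Assumption: status codes for which retrying cannot help.
# FTP_LOGIN_FAILED is named in the post; treating it as a set is hypothetical.
PERMANENT_FAILURES = {"FTP_LOGIN_FAILED"}


def fixed_retry_count(window=RETRY_WINDOW, interval=FIXED_INTERVAL):
    """Number of retries when retrying at a constant interval."""
    return window // interval


def backoff_schedule(window=RETRY_WINDOW, first=FIXED_INTERVAL, factor=2):
    """Delays between attempts, each `factor` times longer than the last,
    for as long as the total wait still fits inside the window.
    The doubling factor is an assumption, not the planned schedule."""
    delays = []
    elapsed = timedelta(0)
    delay = first
    while elapsed + delay <= window:
        delays.append(delay)
        elapsed += delay
        delay *= factor
    return delays


def should_retry(status_code):
    """Stop retrying on failures that will not fix themselves."""
    return status_code not in PERMANENT_FAILURES


if __name__ == "__main__":
    print(fixed_retry_count())       # retries at a fixed 2 minute interval
    print(len(backoff_schedule()))   # retries with doubling intervals
```

With a fixed 2 minute interval, the 3 day window holds 2160 retries. With the assumed doubling schedule, the same window holds 11, so the log list for a failing recording would be far shorter.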